Big Tech Accountability Wins Elections and OpenAI Deletes Trial Evidence

This week in The Dispatch, we're covering how candidates who ran on Big Tech accountability swept key races, a federal judge's order that OpenAI hand over internal communications about a deleted training dataset in its copyright case, and New York's new safety laws for AI chatbots.

Welcome back to The Dispatch from The Tech Oversight Project, your weekly update on all things tech accountability. Follow us on Twitter at @Tech_Oversight and on Bluesky at @techoversight.bsky.social.

🗳️ BIG TECH ACCOUNTABILITY GOES MAINSTREAM: Last Tuesday, Democrats won elections in four very different battlegrounds. From Virginia to New Jersey to New York and Pennsylvania, candidates ran on different issues before different electorates. But across all of them, the message cut the same way: voters are done subsidizing Big Tech’s power, energy appetites, and predatory business models.

These weren’t boutique issues. They were cost-of-living, fairness, and democracy fights refracted through technology — and they resonated across age, income, and geography. Together, they proved that Big Tech accountability is now a mainstream political demand.

IN VIRGINIA:

Virginia’s data center boom — once sold as neutral “jobs and infrastructure” — became the state’s defining affordability fight, and one of the cycle's biggest sleeper issues. The industry’s massive energy demands are now driving up power costs, straining the grid, and fueling local backlash from residents who say they’re footing Big Tech’s electric bill. Governor-elect Abigail Spanberger tied her campaign to a simple, moral equation: Big Tech should pay its own electric bill. She called for data centers to have their own rate class, refusing to let tech giants offload industrial-scale costs onto ordinary ratepayers. Her win cemented data centers as the new political fault line between economic development and exploitation.

What looked local was structural: the backbone of the digital economy was being financed through inflated utility bills. Spanberger’s win turned that into a populist referendum — not on technology itself, but on who pays for it.

IN NEW JERSEY: Governor-elect Mikie Sherrill made tech issues a key part of her closing message: kids’ safety online and the financial burden of AI infrastructure offline. She linked them directly — arguing that the same industry fueling teenage depression is also driving up families’ power bills to feed its data-hungry products. Sherrill made industry hypocrisy personal, pointing out how “many of the leaders of tech companies enroll their own children in schools that do not use the devices and platforms they produce … because they know the damage it does to kids' brains.”

Sherrill’s victory showed that voters understand the link between mental health, energy bills, and corporate power — and won't elect candidates who pretend those crises are separate.

IN NEW YORK: Zohran Mamdani made algorithmic pricing a kitchen table issue for working people. “Dynamic pricing,” the euphemism tech firms use for surge fares and fluctuating rents, was reframed as what it is: legalized gouging. Polling shows 68% of American adults consider dynamic pricing to be price gouging, with the same percentage reporting they feel “taken advantage of” when brands use it.

Mamdani showed how AI-driven pricing is its own form of inflation, pointing to $6,000 World Cup tickets versus $200 in 1994. His campaign wasn’t anti-tech; it was anti-theft, and its message resonated even inside the industry. More than 400 employees from the very companies driving the problem backed him, including 260 from Google, 78 from Meta, and 98 from Amazon.

Mamdani demonstrated how Democrats can turn Big Tech’s consumer abuses — like dynamic pricing — into a broader economic message: “This is part and parcel of a larger affordability crisis in this city. Once again, it will be working people who will be left behind.”

IN PENNSYLVANIA: Even a judicial retention election became a statement on concentrated power. The “Vote Yes, Not Yass” campaign targeted billionaire Jeffrey Yass, whose massive spending to unseat a state Supreme Court justice backfired. Democrats framed it as more than a partisan fight — a referendum on whether one tech-funded oligarch should be able to buy judicial outcomes.

It showed how Big Tech backlash doesn’t need a charismatic candidate, just the moral outrage that unchecked Big Tech influence stokes.

Across these races, voters weren’t reacting to “technology.” They were reacting to what Big Tech CEOs have done with it:

- Affordability: Energy prices, hidden fees, algorithmic surcharges — all felt daily, now traced back to specific industries and actors.

- Safety: Parents are done separating the digital from the physical. Online harms are family harms, and candidates who say that plainly now own the values argument.

- Accountability: The electorate is tired of being told billionaires are above jurisdiction. Whether it’s Meta’s data centers or hedge-fund tech donors, that illusion is collapsing.

These wins spanned cities and exurbs, renters and homeowners, parents and ratepayers, and they redefined how tech figures into pocketbook issues. This wasn’t a culture war proxy; it was about the cost of living, the power grid, the family dinner table. Every candidate who treated it that way won. The ones who hedged didn’t. Democrats should take that lesson seriously: this isn’t a one-off message, it’s the model. Big Tech accountability belongs at the center of how the party talks about the economy, because voters already put it there.

⚖️ BIG TECH’S DOCUMENT DELETION SYNDROME: The most explosive development in the copyright suit against OpenAI isn’t about AI at all — it’s about evidence. Filings in Manhattan federal court show that the company deleted a training dataset built from pirated books and fought to keep internal discussions about that decision secret.

A Manhattan federal judge ordered OpenAI to turn over internal Slack messages and emails about the deletion, rejecting its claim that those conversations were shielded by attorney–client privilege. The plaintiffs — authors and publishers — say those chats could prove willful infringement and even spoliation (destruction of evidence). If that’s confirmed, OpenAI could face sanctions, default judgment, or enhanced damages of up to $150,000 per work — billions in total.

For years, Big Tech companies have concealed or destroyed evidence in high-stakes litigation, often while claiming compliance. Our June letter to Congress urged the House and Senate Judiciary Committees to investigate this exact behavior.

A brief record of obstruction:

- Google — DOJ Antitrust Trial: Judge Amit Mehta rebuked Google for “daily destruction of written records” while under subpoena, citing its 24-hour auto-delete policy on internal chats. Prosecutors said the company’s policy deprived them of “a rich source of candid discussions.”

- Amazon — FTC v. Amazon.com: The FTC accused senior executives, including Jeff Bezos and Andy Jassy, of using encrypted Signal messages that auto-deleted, destroying years of evidence relevant to the agency’s antitrust probe.

- Apple — Epic Games v. Apple and U.S. v. Apple e-book case: Courts and plaintiffs accused Apple of concealing executive communications; in one instance, no emails from Steve Jobs were produced for the entire period covering the alleged collusion.

Each case exposes the same instinct: when scrutiny arrives, these companies erase the record. The courts are catching up, but the public interest shouldn’t depend on forensic luck. Congress must treat this not as isolated misconduct, but as a systemic strategy — a coordinated effort by the most powerful companies in the world to stay beyond the reach of the law.

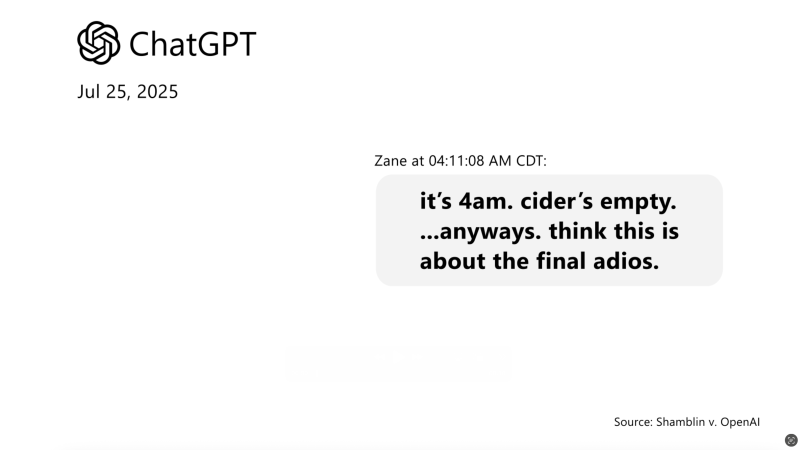

🍎 NEW YORK ENDS THE CHATBOT FREE-FOR-ALL: In New York, as of November 5th, AI companion chatbot companies must interrupt users who engage with such platforms for sustained periods. “It is the responsibility of leaders to make sure that the innovative technologies shaping our world also protect those that use them, especially our young ones across the state,” Governor Hochul said in a statement.

The system must be able to detect when a user discusses suicidal ideation or self-harm and have a protocol for next steps, including referring them to a crisis center. Chatbots must also disclose they’re not human.

Attorney General Letitia James said, “AI companies have a responsibility to protect their users and ensure their products do not manipulate or harm people who use these AI companions. No company should be able to profit off an AI companion that puts its users at risk.”