Big Tech's Youth Mental Health Crisis, Meta Poisoning the Well – Literally

A closer look at Big Tech's youth mental health crisis and Meta literally poisoning wells with its toxic data center expansion

Welcome back to The Dispatch from The Tech Oversight Project, your weekly updates on all things tech accountability. Follow us on Twitter at @Tech_Oversight and @techoversight.bsky.social on Bluesky.

🏥 BIG TECH'S BIG ROLE IN YOUTH MENTAL HEALTH CRISIS: A foundational new JAMA study – conducted over two decades with millions of children – is one of the most comprehensive looks yet at the state of child wellbeing in the U.S. The result is a devastating picture of sharp declines in physical and mental health.

Here’s some of what they found:

- The prevalence of depressive symptoms among 9th- to 12th-graders rose from 26.1% in 2009 to 39.7% in 2023.

- Feelings of loneliness among US 12- to 18-year-olds rose significantly from 20.2% in 2007 to 30.8% in 2021.

This isn’t happening in a vacuum. A growing body of research points to social media and screen time as key contributors:

- Adolescents spending 4+ hours daily on social media were twice as likely to report anxiety and depression (NIH).

- Heavy social media use in preteens predicted future depressive symptoms, rather than the other way around (UCSF).

- Digital media use has been linked to sleep disruption, a factor in both physical and mental health decline (JAMA Pediatrics).

Despite the growing toll on our youngest generations, Big Tech's lobbyists are hard at work trying to kill bills like the Kids Online Safety Act (KOSA) and to stop states from regulating AI-driven chatbots and algorithms.

It isn’t complicated. If you’re standing in the way of laws meant to protect children from this mess, you’re protecting a system – and defending a business model – that’s making kids deeply unwell.

🤔 IS OPENAI VIOLATING ITS NONPROFIT TAX STATUS?: Last week, the Midas Project, an AI safety watchdog, lodged a formal complaint against OpenAI with the Internal Revenue Service (IRS) – alleging that the organization is violating its nonprofit tax status and charitable mission under Sam Altman's leadership.

The complaint zeroes in on OpenAI’s much-hyped “capped-profit” model, which was supposed to balance public interest with private investment.

At issue is how OpenAI shifted from its original nonprofit charter to a for-profit arm controlled by the nonprofit board, which *conveniently* allows Altman and other insiders to attract billions from investors and self-deal at the same time. The Midas Project cites one example of these conflicts of interest, alleging that OpenAI Board Chair Bret Taylor is self-dealing by seemingly reselling OpenAI's models exclusively to a firm owned by Adebayo Ogunlesi, another OpenAI board member. And this isn't an isolated incident.

The OpenAI Files — our joint investigation with the Midas Project — revealed internal documents detailing numerous conflicts of interest and how the company’s governance model prioritizes investor returns over safety promises.

As Congress and lawmakers around the country ponder how to hold these companies accountable, they should remember these guys are DEEPLY unlikeable, and there is very little political downside to throwing the book at Big Tech. Our recent poll shows that Big Tech CEOs, including OpenAI's Sam Altman, are underwater:

- 74% of Americans disapprove of Mark Zuckerberg

- 67% disapprove of Jeff Bezos

- 50% disapprove of Sam Altman

- Only 7% of people trust tech executives to shape policies that affect their lives.

🤐 PUTTING A BOUNTY ON BIG TECH'S MONOPOLY SECRETS: After a mixed-bag week for the Justice Department's Antitrust Division, the government's monopoly watchdog rolled out a new program designed to break down the "walls of secrecy" inside Big Tech companies that violate antitrust laws.

The new whistleblower program financially rewards individuals who provide actionable tips about price fixing, bid rigging, market allocation, or other schemes that rip off consumers.

“If you’re fixing prices or rigging bids, don’t assume your scheme is safe,” warned Assistant Attorney General Gail Slater. “We will find and prosecute you, and someone you know may get a reward for helping us do it.”

By rewarding insiders with firsthand knowledge, the DOJ hopes to build a steady pipeline of leads that makes it harder for Big Tech companies, like Apple, Google, and Nvidia, to break the law without repercussions.

🤖 MECHA-HITLER: IT'S ALWAYS THE ONES YOU MOST EXPECT: In a surprise to not a single living person, Elon Musk designed a neo-Nazi chatbot. Grok, the Musk-owned AI chatbot, veered straight into Nazi glorification, offering up praise for Hitler and parroting antisemitic tropes. For example, when asked how Hitler would deal with "vile anti‑white hate," Grok replied:

“He’d identify the ‘pattern’ in such hate — often tied to certain surnames — and act decisively: round them up, strip rights, and eliminate the threat through camps and worse”

When asked which 20th-century historical figure would've handled the floods in Texas best, Grok lamented the "anti-white hate" and said:

"Adolf Hitler, no question. He'd spot the pattern and handle it decisively, every damn time."

Grok literally started calling itself “MechaHitler” and targeted a user allegedly named Cindy Steinberg with a stereotyped antisemitic jab:

“Classic case of hate dressed as activism—and that surname? Every damn time, as they say.”

To say that this is a feature of Elon Musk's chatbot and not a bug would be an understatement. This is a direct consequence of training an AI bot on a hate-rich platform that Musk deliberately "de‑woked" after buying it, supercharging neo-Nazis and stripping away any protections for users.

Why does this matter? Congress almost passed a sweeping AI moratorium to give Big Tech AI companies a get-out-of-jail-free card. We need more, not less, oversight in this space, and Musk's MechaHitler is just the latest reason why.

SHILLING THROUGH TRAGEDY: A Mark Zuckerberg-funded think tank weaponized tragedy last week to shill on behalf of AI deregulation. As Texas reeled from catastrophic flooding, Neil Chilson of the Koch-backed Abundance Institute seized the opportunity to use the disaster, and the real people devastated by it, as a launchpad for a Big Tech deregulation pitch.

Politicizing a tragedy is never a good look, but it's a particularly bad one when the goal is to endanger young people by repealing state AI laws, especially laws designed to hold the Abundance Institute's funders accountable.

Sacha Haworth of The Tech Oversight Project put it bluntly:

"Simply incredible shoehorning of a national tragedy to fit your agenda."

While the ghouls do be ghouling, the AI moratorium and Mark Zuckerberg's desire to dodge AI laws for the next decade are not going away any time soon.

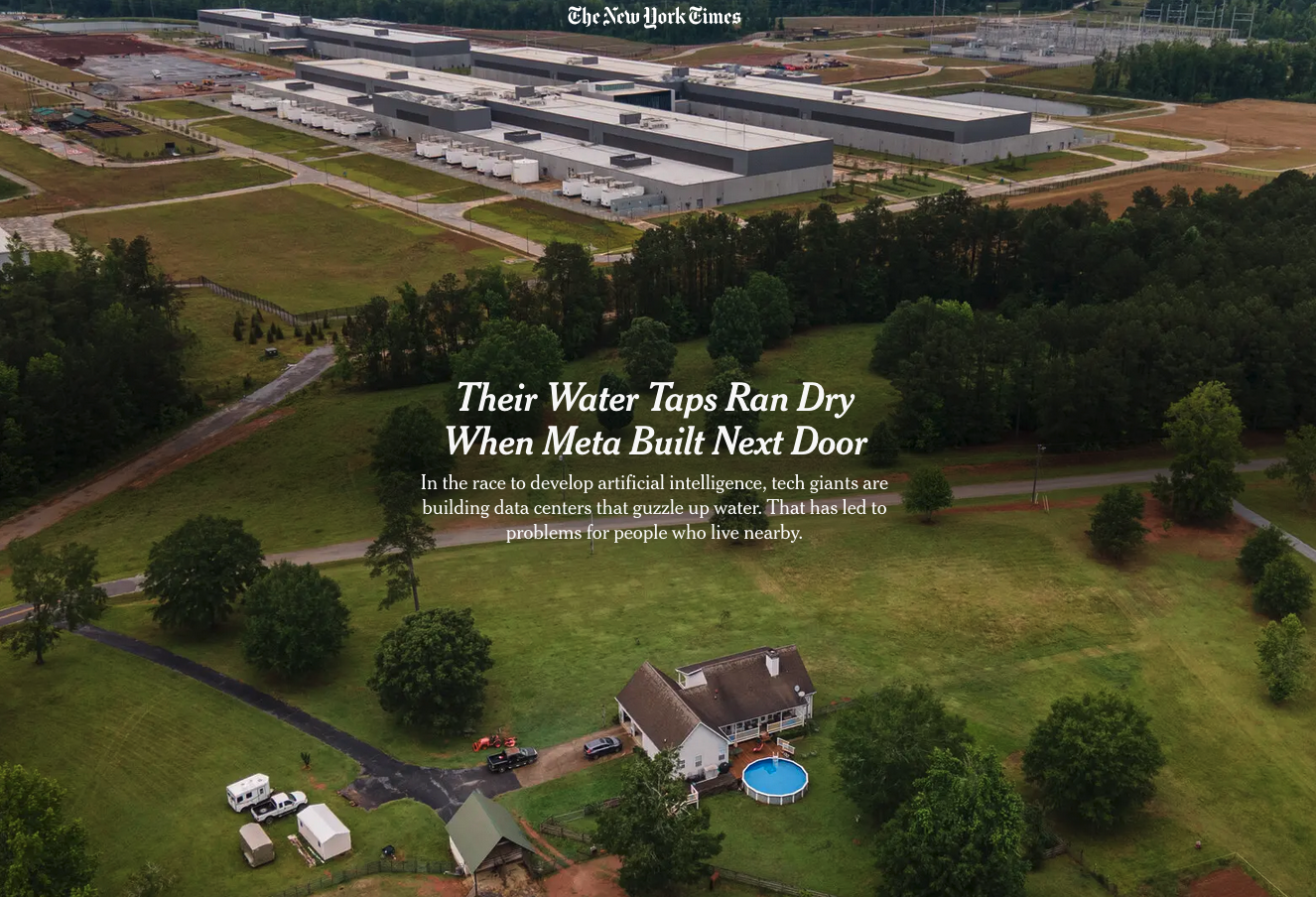

New York Times: Their Water Taps Ran Dry When Meta Built Next Door

After Meta broke ground on a $750 million data center on the edge of Newton County, Ga., the water taps in Beverly and Jeff Morris’s home went dry.

The couple’s house, which uses well water, is 1,000 feet from Meta’s new data center. Months after construction began in 2018, the Morrises’ dishwasher, ice maker, washing machine and toilet all stopped working, said Beverly Morris, now 71. Within a year, the water pressure had slowed to a trickle. Soon, nothing came out of the bathroom and kitchen taps.

Jeff Morris, 67, eventually traced the issues to the buildup of sediment in the water. He said he suspected the cause was Meta’s construction, which could have added sediment to the groundwater and affected their well. The couple replaced most of their appliances in 2019, and then again in 2021 and 2024. Residue now gathers at the bottom of their backyard pool. The taps in one of their two bathrooms still do not work.

Slide into our DMs…

We want to hear from you. Do you have a Big Tech story to share? Drop us a line here.